For years, digital marketers have navigated Google’s Performance Max campaigns with a blend of sophisticated strategy and unavoidable intuition, especially when it came to deciphering the true impact of their creative assets. The platform’s powerful automation often meant sacrificing granular control, leaving advertisers to wonder which specific images, videos, or headlines were genuinely moving the needle. Now, a pivotal shift is underway as Google introduces a native A/B testing framework directly within Performance Max, finally offering a data-backed solution to the persistent challenge of creative validation. This development promises to replace ambiguity with analytical certainty, equipping advertisers with the tools to methodically prove the value of their creative choices.

Is Your Performance Max Creative Strategy More Guesswork Than Gospel?

Advertisers frequently face the dilemma of attributing campaign success to specific creative elements within the automated environment of Performance Max. Without a controlled testing mechanism, determining whether a new video ad or a refreshed headline was responsible for an uplift in conversions was a matter of correlation rather than causation. This ambiguity often led to creative refresh cycles based on broad assumptions or incomplete data, hindering the ability to build a reliable, iterative optimization strategy.

The introduction of Google’s built-in experiment feature provides the long-awaited answer to this uncertainty. By allowing advertisers to run structured, head-to-head tests between different sets of creative assets within the same campaign, the new tool eliminates the guesswork. This feature directly addresses a core pain point for performance marketers, transforming creative optimization from an art of educated guessing into a science of data-driven decision-making.

The Evolution of PMax: From a Black Box to a Testable Powerhouse

Historically, Performance Max campaigns were often perceived as a “black box,” where inputs were provided and results were delivered with limited insight into the machine’s internal workings. The lack of controlled, in-platform testing capabilities for creatives was a significant part of this challenge. Advertisers had to resort to less reliable methods, such as running separate campaigns or analyzing performance shifts before and after a creative update, both of which introduced numerous external variables that could skew results.

This latest update is part of a broader, encouraging trend from Google toward providing more transparency and control within its most automated solutions. By embedding a robust experimentation framework into PMax, Google acknowledges the need for advertisers to have deeper, more reliable insights. This move signals a maturation of the platform, evolving it from a purely automated engine into a sophisticated powerhouse that combines the benefits of machine learning with the strategic oversight and validation required by expert marketers.

Unpacking the New Experiment Framework: How It Works and Why It Is a Game Changer

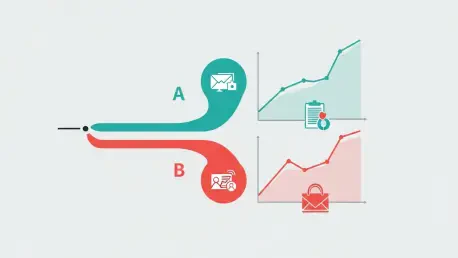

The mechanics of the new native A/B testing feature are straightforward yet powerful. Within a single Performance Max asset group, an advertiser can designate their existing assets as the “control” group and a new set of creatives as the “treatment” group. The system then splits the asset group’s traffic—typically on a 50/50 basis—between these two sets, ensuring a true, apples-to-apples comparison under identical campaign conditions.

This ability to isolate the creative variable is the feature’s most significant benefit. By running the experiment within the same campaign, asset group, and auction environment, advertisers can eliminate much of the noise that contaminates other testing methods. Factors like budget, targeting, and seasonality affect both the control and treatment groups equally. Consequently, a statistically significant difference in performance can be attributed to the creative assets being tested rather than to outside factors, providing clean, actionable data to inform future strategy.

Early Insights and Expert Takes From the Field

The beta version of this feature was first brought to the industry’s attention by a Google Ads expert who shared findings on social platforms, highlighting the community-driven nature of discovery in digital marketing. This early look provided a valuable first glimpse into the tool’s practical application and potential impact, sparking immediate interest and discussion among performance marketing professionals who have long sought such a capability.

Initial observations from these early tests offer crucial lessons for advertisers. A key finding suggests that experiments running for less than three weeks may yield unstable or inconclusive results, particularly for accounts with lower conversion volumes. A longer duration is recommended to give the test a realistic chance of reaching statistical significance. This underscores the importance of patience in testing, allowing the algorithm sufficient time to gather data and deliver reliable performance metrics that can confidently guide major creative decisions.
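To make the link between conversion volume and test duration concrete, the sketch below estimates how long a test might need to run. It is a rough planning aid, not part of Google's tooling: it applies the standard sample-size approximation for comparing two conversion rates, and every name and input in it (`required_sample_per_arm`, `estimated_days`, the baseline rate, the expected lift, the daily click volume) is a hypothetical assumption chosen for illustration.

```python
import math
from statistics import NormalDist


def required_sample_per_arm(baseline_rate, relative_lift, alpha=0.05, power=0.8):
    """Approximate clicks needed in EACH arm for a two-sided two-proportion test.

    baseline_rate: assumed conversion rate of the control assets (e.g. 0.03)
    relative_lift: smallest relative change worth detecting (e.g. 0.2 for +20%)
    """
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_lift)
    if not (0 < p1 < 1 and 0 < p2 < 1):
        raise ValueError("conversion rates must fall strictly between 0 and 1")
    if p1 == p2:
        raise ValueError("relative_lift must be non-zero")

    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # critical value for significance
    z_beta = NormalDist().inv_cdf(power)           # critical value for power
    p_bar = (p1 + p2) / 2

    numerator = (
        z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
        + z_beta * math.sqrt(p1 * (1 - p1) + p2 * (1 - p2))
    ) ** 2
    return math.ceil(numerator / (p2 - p1) ** 2)


def estimated_days(baseline_rate, relative_lift, daily_clicks, split=0.5):
    """Days until the smaller arm collects the required sample (hypothetical inputs)."""
    per_arm = required_sample_per_arm(baseline_rate, relative_lift)
    clicks_per_day_in_smaller_arm = daily_clicks * min(split, 1 - split)
    return math.ceil(per_arm / clicks_per_day_in_smaller_arm)


if __name__ == "__main__":
    # Illustrative numbers only: 3% baseline conversion rate, +20% lift, 1,000 clicks/day.
    print(estimated_days(baseline_rate=0.03, relative_lift=0.2, daily_clicks=1000))
```

Under these assumptions, lower daily volume or a smaller expected lift pushes the estimate well past three weeks, which is consistent with the early observation that short tests on low-volume accounts tend to be inconclusive.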

A Practical Guide: Your First PMax Creative A/B Test

To begin your first experiment, the initial step is to define a clear hypothesis. Select a high-priority campaign and asset group, then craft a distinct set of “treatment” assets to test against your “control” group. For example, your hypothesis might be that lifestyle-oriented imagery will outperform product-focused shots, or that a value-driven headline will generate more conversions than one focused on features.

Once the test is configured, adhering to best practices is essential for a reliable outcome. It is highly recommended to run the experiment for a sufficient duration, allowing for weekly conversion cycles and data fluctuations to normalize. Critically, advertisers should avoid making other significant changes to the campaign, such as adjusting budgets or bidding strategies, while a test is active. Maintaining a stable environment ensures that the creative assets are the only variable being measured, leading to trustworthy results. After the experiment concludes, the final step involves interpreting the performance data and decisively applying the winning creative set to the entire asset group.
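For the interpretation step, one common way to check whether the gap between the two creative sets is larger than random variation would explain is a two-proportion z-test. The sketch below is a minimal illustration under stated assumptions, not a description of how Google Ads computes or reports experiment results: the function name and the conversion and click counts are hypothetical, and it assumes you can export conversions and clicks for each arm.

```python
import math
from statistics import NormalDist


def two_proportion_z_test(conv_control, n_control, conv_treatment, n_treatment):
    """Return (z, two-sided p-value) for the difference in conversion rates.

    conv_*: conversions recorded in each arm
    n_*:    clicks (or other interactions) recorded in each arm
    """
    rate_control = conv_control / n_control
    rate_treatment = conv_treatment / n_treatment

    # Pooled rate under the null hypothesis that both creative sets perform the same.
    pooled = (conv_control + conv_treatment) / (n_control + n_treatment)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_control + 1 / n_treatment))
    if std_err == 0:
        raise ValueError("cannot test arms with zero variance (no or all conversions)")

    z = (rate_treatment - rate_control) / std_err
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


if __name__ == "__main__":
    # Hypothetical export: control 300 conversions / 10,000 clicks,
    # treatment 380 conversions / 10,000 clicks.
    z, p = two_proportion_z_test(300, 10_000, 380, 10_000)
    print(f"z = {z:.2f}, p = {p:.4f}")
```

A small p-value (0.05 is a conventional threshold) suggests the difference is unlikely to be noise; a large one suggests the test needs more data before a winner is applied.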

The Future of PMax: Data-Driven Creativity at Scale

The integration of native A/B testing represents a fundamental shift in how advertisers approach Performance Max, moving the platform further away from its opaque origins toward a more transparent and strategically manageable tool. By providing a reliable method to quantify the impact of creative work, Google empowers marketers to justify creative investments and systematically refine their messaging based on empirical evidence rather than intuition. This development enables teams to build a scalable, data-first creative strategy.

This capability not only enhances campaign performance but also fosters a more collaborative and effective relationship between media buyers and creative teams. The data generated by these experiments creates a common language for both sides, allowing for more productive conversations about what works and why. The introduction of this feature marks a significant milestone, solidifying Performance Max’s role as a central component of a modern, data-informed marketing organization.