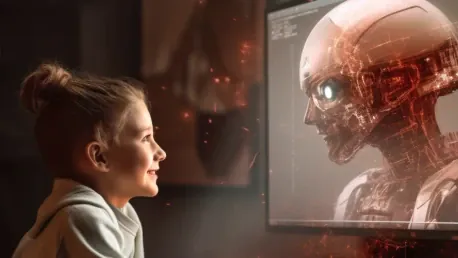

The rapid advancement of artificial intelligence (AI) has brought about significant changes in our daily lives, revolutionizing how we interact, communicate, and even make decisions. However, amid the vast opportunities presented by AI lies a darker side that cannot be ignored: the potential for abuse, especially with generative AI. This article examines the growing risks associated with AI-generated content and explores strategies to mitigate them through education and empowerment initiatives. As AI becomes more deeply integrated into society, from entertainment to security, ensuring online safety becomes paramount.

The Rise of AI and Growing Concerns

AI use has increased substantially, with the share of people worldwide who use AI rising from 39% in 2023 to 51% in 2025. This surge in adoption highlights AI’s expanding presence in many aspects of life, from personal gadgets to large-scale industrial applications. However, this growth has also heightened concerns about the potential misuse of generative AI. According to Microsoft’s ninth Global Online Safety Survey, 88% of individuals expressed worry about AI-generated content, up from 83% the previous year, a sign of growing public awareness and caution.

One of the primary challenges users face is the difficulty in identifying AI-generated content, which can be indistinguishable from genuine human-created content. The survey revealed that 73% of respondents found it challenging to spot AI-generated images, and only 38% of the images were correctly identified. This difficulty exacerbates the risks associated with abusive AI content, making it crucial to address these concerns effectively. As AI continues to evolve and improve in mimicking human behavior and creation, the task of discerning between real and AI-generated content will become increasingly complex, necessitating robust solutions.

Microsoft’s Commitment to Responsible AI Advancement

Microsoft is dedicated to advancing AI responsibly and has implemented various measures to build a robust safety architecture. The company emphasizes the importance of safeguarding services from abuse and has adopted a comprehensive approach to address harmful content creation. Public awareness and education are key components of this strategy, with a particular focus on media literacy and responsible AI use. By fostering a deeper understanding of AI and its potential risks, Microsoft seeks to empower users to make informed decisions and navigate the digital landscape safely.

To tackle the issue of harmful AI-generated content, Microsoft has introduced several educational and empowerment initiatives. These initiatives aim to equip individuals with the knowledge and skills needed to safely interact with AI technologies. By proactively engaging with the community, Microsoft aspires to instill a sense of responsibility among AI users while promoting the advantages of these technologies. This two-pronged approach ensures that users are not only aware of the potential risks but also understand how to leverage AI positively and safely.

Educational Initiatives and Partnerships

One significant partnership is with Childnet, a leading UK organization focused on child internet safety. Through this collaboration, Microsoft is developing educational materials to prevent the misuse of AI, such as the creation of deepfakes. These resources are designed for schools and families, helping to protect children from online risks and promoting responsible AI use. By disseminating these materials, Microsoft aims to arm educators and parents with the tools necessary to teach children about the potential dangers and ethical considerations of AI.

Additionally, Microsoft has launched the “CyberSafe AI: Dig Deeper” educational game within Minecraft and Minecraft Education. This interactive game teaches young users about the responsible use of AI through engaging adventures. Players are challenged to solve puzzles and ethical dilemmas related to AI, fostering a deeper understanding of digital safety. Although the game does not involve direct interaction with generative AI, it simulates scenarios that convey important lessons about responsible AI usage. By using a popular, interactive platform like Minecraft, Microsoft can reach younger audiences effectively and help them build digital safety skills early.

Tailored Resources for Older Adults

Recognizing the unique needs of older adults, Microsoft has partnered with Older Adults Technology Services (OATS) from AARP to create resources specifically tailored for individuals aged 50 and above. This partnership includes an AI guide that provides insights into the benefits and risks of AI, along with safety tips. Training for OATS call center staff to address AI-related inquiries is also part of this initiative, aimed at boosting older adults’ confidence in navigating AI technology and identifying potential scams. By addressing the specific concerns of this demographic, Microsoft aims to create a more inclusive and secure digital environment for all users.

These tailored resources are designed to empower older adults, ensuring they have the knowledge and tools needed to stay safe online. Whether it’s understanding how to spot fake news, identifying scams, or learning the basics of AI technology, the goal is to reduce the digital divide and ensure older adults can participate in the digital world confidently. By educating a broader section of the population, Microsoft seeks to create a more informed, vigilant, and safe online community.

Insights from the Global Online Safety Survey

The Global Online Safety Survey provides valuable insights into the challenges and concerns associated with AI-generated content. New questions were introduced to gauge people’s ability to identify AI-generated content, using images from Microsoft’s “Real or Not” quiz. Respondents rated their confidence in spotting deepfakes before and after viewing a series of images, and 73% said they found it challenging to identify AI-generated images. This difficulty underscores the need for continued efforts in education and technological support to help users recognize AI creations.

Among the common concerns about generative AI were scams (73%), sexual or online abuse (73%), and deepfakes (72%). These findings highlight the broad spectrum of potential harms posed by AI-generated content and the importance of addressing these risks holistically. Educating users about the signs and tactics used in AI scams and abuse scenarios is essential in fostering a robust defense against such threats. Continuous monitoring and updating of the safety approach are required to keep up with the rapid innovations within the AI field.

Balancing Safety and Human Rights

The rapid rise of AI is transforming everyday life, reshaping how we interact, communicate, and make decisions. Generative AI in particular brings remarkable opportunities but also carries real potential for misuse. This article has examined the emerging risks associated with AI-generated content and the education and empowerment initiatives intended to address them. As AI continues to embed itself in many facets of society, from entertainment to security, ensuring online safety grows in importance. The potential for AI to be exploited for malicious purposes, including the creation of fake news, deepfakes, and other misleading content, underscores the need for vigilance. It is therefore crucial to implement robust strategies that raise awareness, educate users, and promote ethical AI use. Doing so allows society to harness AI’s benefits while minimizing its risks and fostering a safer, more informed digital landscape.