A peculiar phenomenon has taken hold in the digital marketing world, one where companies are meticulously crafting web content that no human user will ever intentionally see. This strategy, born from the rapid ascent of AI-driven search, involves creating stripped-down, machine-readable web pages designed exclusively for Large Language Models (LLMs). This approach represents a significant gamble, betting that a simplified format can win favor with AI crawlers and secure coveted citations in AI-generated answers. This analysis dissects the logic, widespread adoption, and ultimate effectiveness of this controversial trend, exploring whether it is a legitimate new frontier in SEO or a misguided detour in the age of artificial intelligence.

The Rise and Mechanics of a New SEO Frontier

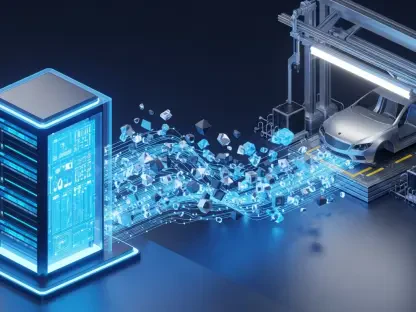

The strategic impulse to cater directly to AI crawlers is rooted in a compelling, if unproven, theory. As AI Overviews and conversational search interfaces began to dominate information discovery, marketers and developers hypothesized that complexity was the enemy of machine comprehension. The core idea was that by removing the elements designed for human interaction (intricate styling, dynamic JavaScript, advertisements, and complex navigation), they could present a pure, unadulterated stream of information for AI systems. This, in turn, was believed to make the content easier to parse, process, and ultimately cite as an authoritative source.

This theory did not remain in the realm of speculation for long. Starting in 2026, the adoption of LLM-only pages gained significant momentum, spreading rapidly across the technology, Software-as-a-Service (SaaS), and e-commerce sectors. Companies, eager to maintain their visibility in a shifting search landscape, began investing resources into creating parallel digital footprints invisible to their customers but fully exposed to AI. It became a quiet arms race to see who could become the most “machine-friendly,” leading to the development of several distinct and widely implemented tactics.

The Theory and Adoption of LLM-Only Pages

The fundamental premise driving this trend is the belief that machine readability directly translates to higher AI visibility. By offering a simplified path to information, proponents argue that a website can lower the computational cost for an AI to extract key facts, thereby increasing its chances of being selected as a source over a more complex competitor. This logic suggests that an LLM, faced with a choice between a JavaScript-heavy, ad-laden webpage and a clean, text-only markdown file containing the same information, would naturally favor the latter for its efficiency and clarity.

Consequently, this strategy has been embraced by a diverse range of businesses scrambling to optimize for this new paradigm. Early adopters in the tech documentation space saw it as a logical extension of their content philosophy, aiming to make complex technical information as accessible as possible. E-commerce giants followed, hoping to feed product specifications directly into AI systems to answer consumer queries. The trend represents a fundamental shift in thinking, where for the first time, a significant portion of a site’s content strategy is aimed not at a human audience, but at an algorithmic one.

Four Primary Tactics in Practice

One of the earliest and simplest implementations is the llms.txt file. Based on a 2024 proposal by Jeremy Howard of Answer.AI, this tactic involves placing a markdown file at the root of a website (at the path /llms.txt). The file serves as a curated guide for AI systems, typically containing a brief project description and a structured list of links to the most critical pages, such as API documentation or product guides. Prominent technology companies like Stripe and Cloudflare have adopted this method to provide a clear, organized entry point to their extensive technical libraries.
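To make the format concrete, here is a minimal sketch of generating such a file, assuming the layout described in the llms.txt proposal: an H1 title, a `>` blockquote summary, then `##` sections containing markdown link lists, each link optionally followed by a short note. The `build_llms_txt` helper, the `Link` type, the company name, and the URLs are all hypothetical.

```python
# Hypothetical sketch: assemble an llms.txt body in the proposal's layout
# (H1 title, blockquote summary, H2 sections of markdown links).
from dataclasses import dataclass


@dataclass
class Link:
    title: str
    url: str
    note: str = ""  # optional description shown after the link


def build_llms_txt(title: str, summary: str, sections: dict[str, list[Link]]) -> str:
    lines = [f"# {title}", "", f"> {summary}", ""]
    for heading, links in sections.items():
        lines += [f"## {heading}", ""]
        for link in links:
            # Each entry is a markdown list item; the note follows a colon.
            entry = f"- [{link.title}]({link.url})"
            if link.note:
                entry += f": {link.note}"
            lines.append(entry)
        lines.append("")
    return "\n".join(lines)


if __name__ == "__main__":
    print(build_llms_txt(
        "Example Co",
        "Example Co sells hypothetical widgets.",
        {"Docs": [Link("API reference", "https://example.com/docs/api.md", "REST endpoints")]},
    ))
```

The output would be served as plain text at `/llms.txt`; nothing about the file changes how the linked pages themselves are written.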

Another popular approach is the creation of markdown page duplicates. This strategy involves generating a .md version of a standard HTML page, effectively stripping it down to its textual core with only basic formatting. The goal is to provide an unadulterated content source that an AI can consume without needing to render CSS or execute JavaScript. This method offers a direct, uncluttered alternative for AI crawlers, operating on the assumption that less is more when it comes to machine parsing.
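A rough sketch of how a markdown duplicate might be derived from an existing HTML page, using only Python's standard `html.parser`. It keeps `h1` to `h3` headings, paragraphs, list items, and links, and drops the contents of `<script>`, `<style>`, and `<nav>`. Which elements count as "core" text is an assumption here; real converters handle far more cases (tables, nested lists, images).

```python
# Sketch: produce a stripped-down .md duplicate of an HTML page.
# Keeps h1-h3, <p>, <li> and <a>; skips <script>, <style> and <nav> contents.
import re
from html.parser import HTMLParser
from typing import Optional

SKIP = {"script", "style", "nav"}
HEADINGS = {"h1": "#", "h2": "##", "h3": "###"}
BLOCKS = {"p", "li"} | set(HEADINGS)


class MarkdownDuplicate(HTMLParser):
    def __init__(self) -> None:
        super().__init__()
        self.out: list[str] = []   # finished markdown blocks
        self.buf: list[str] = []   # text of the block being built
        self.prefix = ""           # "# ", "- ", etc. for the current block
        self.skip_depth = 0        # > 0 while inside a skipped element
        self.href: Optional[str] = None

    def handle_starttag(self, tag, attrs):
        if tag in SKIP:
            self.skip_depth += 1
        elif self.skip_depth:
            return
        elif tag in HEADINGS:
            self.prefix = HEADINGS[tag] + " "
        elif tag == "li":
            self.prefix = "- "
        elif tag == "a":
            self.href = dict(attrs).get("href")
            if self.href:
                self.buf.append("[")

    def handle_endtag(self, tag):
        if tag in SKIP:
            self.skip_depth = max(0, self.skip_depth - 1)
        elif self.skip_depth:
            return
        elif tag == "a":
            if self.href:
                self.buf.append(f"]({self.href})")
            self.href = None
        elif tag in BLOCKS:
            self.flush()

    def handle_data(self, data):
        if not self.skip_depth:
            # Collapse whitespace runs but keep word boundaries around links.
            self.buf.append(re.sub(r"\s+", " ", data))

    def flush(self) -> None:
        text = re.sub(r"\s+", " ", "".join(self.buf)).strip()
        if text:
            self.out.append(self.prefix + text)
        self.buf, self.prefix = [], ""


def html_to_markdown(html: str) -> str:
    parser = MarkdownDuplicate()
    parser.feed(html)
    parser.close()
    parser.flush()  # emit any trailing text that was not inside a closed block
    return "\n\n".join(parser.out) + "\n"
```

The result would typically be published alongside the original, for example `/products/widget-x.md` next to `/products/widget-x`; that URL convention is also an assumption.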

A more comprehensive and resource-intensive tactic is the development of dedicated /ai or /llm directories. In this scenario, companies build entire “shadow” versions of their websites designed exclusively for machine consumption. These directories often mirror the structure of the human-facing site but present the information in a radically simplified format, sometimes containing more detailed data or uniquely structured content not available on the main pages. This approach represents the deepest commitment to the LLM-only philosophy, creating a parallel infrastructure for AI crawlers.

Finally, e-commerce and SaaS companies have increasingly turned to JSON metadata feeds. This technique involves creating structured data files that contain clean, organized information about products, including specifications, pricing, and availability. Companies like Dell use this method to provide a direct, unambiguous data source for AI systems. The intent is to allow an AI to answer specific, data-driven queries without needing to interpret marketing copy or navigate a complex user interface, feeding it the raw facts directly.
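A minimal sketch of such a feed, serializing specifications, pricing, and availability into one JSON document. The field names and the `version` marker are a hypothetical schema, not Dell's or any other vendor's actual format. Prices are kept as decimal strings to avoid floating-point rounding.

```python
# Hypothetical JSON product feed: field names are illustrative only.
import json
from dataclasses import asdict, dataclass


@dataclass
class Product:
    sku: str
    name: str
    price: str          # decimal string, e.g. "19.99", to avoid float rounding
    currency: str       # ISO 4217 code such as "USD"
    in_stock: bool
    specs: dict[str, str]


def build_feed(products: list[Product]) -> str:
    # One top-level object with a version marker and a flat product list, so a
    # consumer can read specs, pricing and availability without parsing HTML.
    feed = {"version": 1, "products": [asdict(p) for p in products]}
    return json.dumps(feed, indent=2, sort_keys=True)


if __name__ == "__main__":
    print(build_feed([Product("X-1", "Widget", "19.99", "USD", True, {"weight": "2 kg"})]))
```

An established alternative that does not require a separate file is schema.org `Product` and `Offer` markup embedded in the human-facing page itself.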

Debunking the Myth: Data-Driven Insights and Official Stances

Despite the logical appeal and growing adoption of these tactics, an overwhelming consensus has emerged from extensive research and official industry statements: the specific format of LLM-only pages is largely irrelevant. The most critical factor determining whether an AI cites a piece of content is not its simplicity but its substance. The presence of unique, high-quality, and genuinely useful information that cannot be found elsewhere remains the single most powerful driver of AI citation, regardless of whether it is presented in clean markdown or complex HTML.

This conclusion is not based on opinion but on rigorous, data-driven analysis from across the industry. Multiple independent studies have scrutinized the effectiveness of these specialized formats, and their findings consistently point away from formatting tricks and back toward content quality. This growing body of evidence presents a strong counter-narrative to the LLM-only trend, suggesting that the resources invested in creating these machine-facing pages may be profoundly misplaced.

Key Research Findings

A pivotal analysis conducted by researcher Malte Landwehr examined nearly 18,000 AI citations across several websites employing LLM-only strategies. The results were telling. Formats like llms.txt and .md pages accounted for a negligible percentage of citations, registering at 0.03% and 0%, respectively. In contrast, the modest success observed with dedicated /ai directories or JSON files was directly and exclusively tied to those assets containing unique information—such as more detailed specifications or specific data points—that was not available on the main website. The format itself provided no advantage; the unique content did.
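Landwehr's exact methodology is not described here, so the following is only a hypothetical sketch of the basic bookkeeping behind figures like these: classify each cited URL by asset type, then compute each type's share of all citations. The URL rules (for instance, treating any path under `/ai/` or `/llm/` as a dedicated directory) and the sample URLs are assumptions. A share like this measures format alone; separating format from content, as the analysis above did, requires comparing what each asset actually says.

```python
# Hypothetical sketch: share of citations per asset format.
from collections import Counter
from urllib.parse import urlparse


def classify(url: str) -> str:
    path = urlparse(url).path.lower()
    if path.endswith("/llms.txt"):
        return "llms.txt"
    # A dedicated directory takes precedence over the file extension.
    if path.startswith(("/ai/", "/llm/")):
        return "ai-directory"
    if path.endswith(".json"):
        return "json"
    if path.endswith(".md"):
        return "markdown"
    return "html"


def citation_shares(cited_urls: list[str]) -> dict[str, float]:
    if not cited_urls:
        return {}
    counts = Counter(classify(u) for u in cited_urls)
    total = sum(counts.values())
    # Percentage of all citations, rounded to two decimal places.
    return {fmt: round(100 * n / total, 2) for fmt, n in counts.items()}
```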

Further reinforcing this conclusion, a large-scale study by SE Ranking analyzed 300,000 domains to test whether the presence of an llms.txt file correlates with AI citation frequency. The study’s machine learning model found that the file’s existence was not a useful predictor of citation success. In fact, the model’s accuracy improved when the llms.txt variable was removed, leading the researchers to classify its presence as statistical “noise.” At this scale, the data indicates that AI systems are not actively seeking out or rewarding these specialized files.
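The "accuracy improved when the variable was removed" result comes from a drop-feature ablation: train and score a model with and without one input, then compare. SE Ranking's actual model is not described in this article, so a 1-nearest-neighbour classifier scored by leave-one-out accuracy stands in here purely for illustration. The toy data is invented, not taken from the study: column 0 separates the two classes, while column 1 is a 0/1 flag unrelated to the label, playing the role of a noise feature.

```python
# Illustrative drop-feature ablation with a swapped-in 1-NN model.
import math


def loo_1nn_accuracy(rows: list[list[float]], labels: list[int]) -> float:
    """Leave-one-out accuracy of a 1-nearest-neighbour classifier."""
    correct = 0
    for i, row in enumerate(rows):
        # Predict row i from the closest *other* row (Euclidean distance).
        nearest = min(
            (j for j in range(len(rows)) if j != i),
            key=lambda j: math.dist(row, rows[j]),
        )
        correct += labels[nearest] == labels[i]
    return correct / len(rows)


def drop_feature(rows: list[list[float]], index: int) -> list[list[float]]:
    """Return a copy of rows with column `index` removed."""
    return [r[:index] + r[index + 1:] for r in rows]


rows = [[0.0, 1.0], [0.1, 0.0], [1.0, 1.0], [1.1, 0.0]]  # toy data
labels = [0, 0, 1, 1]
with_flag = loo_1nn_accuracy(rows, labels)
without_flag = loo_1nn_accuracy(drop_feature(rows, 1), labels)
```

On this toy data the unrelated flag dominates the distances, so accuracy is 0.0 with it and 1.0 without it. Real studies use larger models and cross-validation, but the comparison is the same in spirit.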

Official Rejection from Major Platforms

The data from independent researchers is strongly corroborated by explicit statements from the major technology platforms themselves. Representatives from Google, including John Mueller and Gary Illyes, have publicly stated that the company does not use llms.txt files and has no plans to implement them. Mueller went so far as to compare the trend to the “keywords meta tag,” a once-popular SEO tactic that search engines stopped treating as a meaningful signal years ago.

This stance is not unique to Google. To date, no major AI company has endorsed the use of these special formats for content discovery on third-party websites. While some AI developers maintain their own llms.txt files, these are for documenting their own APIs and services, not for crawling the broader web. The message from the creators of these AI systems is clear: they are being built to understand the web as it exists for humans, not to rely on specially created machine-only shortcuts.

The Future of AI Search Optimization: A Return to Fundamentals

The fascination with LLM-only pages appears to stem from a misdiagnosis of the problem. The core issue hindering effective AI crawling is often not the content’s format but its fundamental technical accessibility. The true barrier is frequently a heavy reliance on client-side JavaScript, which can be difficult for many bots to render and process correctly. When critical information is hidden behind complex scripts that fail to execute in a crawler’s environment, that content effectively becomes invisible, regardless of how valuable it might be.
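One way to check for this problem is to look at the raw HTML a server returns, which is roughly what a crawler that does not execute JavaScript receives. The sketch below extracts text from that HTML while ignoring `<script>` and `<style>` bodies, then tests whether a key phrase is present. It is an approximation under that assumption; crawlers differ in whether and how they render. In practice the HTML would come from an HTTP fetch (for example `urllib.request.urlopen`) rather than a string literal.

```python
# Approximate what a non-rendering crawler sees: text in the raw HTML only,
# with <script>/<style> bodies ignored and no JavaScript executed.
from html.parser import HTMLParser


class RawTextExtractor(HTMLParser):
    def __init__(self) -> None:
        super().__init__()
        self.chunks: list[str] = []
        self.in_code = 0  # > 0 while inside <script> or <style>

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self.in_code += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style"):
            self.in_code = max(0, self.in_code - 1)

    def handle_data(self, data):
        if not self.in_code:
            self.chunks.append(data)


def visible_without_js(raw_html: str, phrase: str) -> bool:
    parser = RawTextExtractor()
    parser.feed(raw_html)
    parser.close()
    text = " ".join(" ".join(parser.chunks).split())  # normalize whitespace
    return phrase in text


server_rendered = "<html><body><h1>Widget X</h1><p>Price: $19.99</p></body></html>"
client_rendered = (
    "<html><body><div id='root'></div>"
    "<script>window.PRICE_TEXT = \"Price: $19.99\";</script></body></html>"
)
```

Here the price is visible in the server-rendered page but not in the client-rendered one, where it exists only inside a script that a non-rendering crawler never runs.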

Therefore, the recommended path forward involves a strategic reallocation of resources. Instead of building and maintaining separate, bot-only content silos, development efforts should be invested in creating a single, high-quality, and technically sound website that serves both humans and machines effectively. This means returning to the foundational principles of good web development, such as a focus on clean, semantic HTML, robust information architecture that makes content easy to discover, and a preference for server-side rendering to ensure all content is readily available to any user agent, human or machine.
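As a small illustration of the alternative, the sketch below renders a product into semantic HTML on the server, so every fact is present in the initial response that both browsers and crawlers receive. The function name, fields, and markup choices (an `<article>` with a `<dl>` of label/value pairs) are illustrative assumptions, not a prescribed template.

```python
# Sketch: server-side render one product as semantic HTML.
from html import escape


def render_product_page(name: str, summary: str, specs: dict[str, str]) -> str:
    # A definition list keeps each spec as an explicit label/value pair.
    rows = "\n".join(
        f"      <dt>{escape(k)}</dt><dd>{escape(v)}</dd>" for k, v in specs.items()
    )
    return (
        "<!DOCTYPE html>\n"
        '<html lang="en">\n'
        f'<head><meta charset="utf-8"><title>{escape(name)}</title></head>\n'
        "<body>\n"
        "<main>\n"
        "  <article>\n"
        f"    <h1>{escape(name)}</h1>\n"
        f"    <p>{escape(summary)}</p>\n"
        "    <dl>\n"
        f"{rows}\n"
        "    </dl>\n"
        "  </article>\n"
        "</main>\n"
        "</body>\n"
        "</html>\n"
    )
```

Because the same response serves people and machines, there is a single source of truth to maintain instead of a human page plus a separate machine-only copy.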

This entire episode with LLM-only pages highlights a recurring pattern in the world of SEO: the perennial temptation to chase algorithm-beating “hacks” over sustainable, user-centric practices. The pursuit of a quick technical fix often distracts from the more challenging but ultimately more rewarding work of creating genuinely valuable experiences. The ultimate strategy for achieving and maintaining visibility in AI-driven search remains perfectly aligned with traditional SEO best practices: create authoritative, useful, and easily accessible content for your target audience.

Conclusion: Quality Over Gimmicks in the AI Era

In the final analysis, the trend of creating LLM-only pages rests on a flawed understanding of how advanced AI systems operate. The empirical data from multiple large-scale studies, together with official statements from major platforms like Google, indicate that these special formats offer no inherent advantage for discoverability or citation. The chase for a technical shortcut leads to a dead end.

The most effective and future-proof strategy for AI search optimization was, and remains, a resolute focus on foundational web principles: technically sound, well-structured websites filled with high-quality, unique content. The best page for an AI is simply the best page for a human user, and in the new era of AI, quality and accessibility outlast gimmicks.